Problem: Filtered listings on the web frontend were taking a long time (22-25 seconds) to load.

Analysis: Identified inefficient database queries as the culprit. Specifically, tables lacked proper indexing, causing the database to scan through a large amount of data unnecessarily when filtering was applied. Joins with filtering conditions were especially slow because the primary key alone wasn’t enough to optimize the search.

Solution:

- Added required indexes: Created indexes on the columns used for filtering. Indexes act like an organized filing system for the database, allowing it to quickly locate relevant data based on the filter criteria. This significantly reduces the amount of data the database needs to scan.

- Combined partitioning with the filter field and primary key: Partitioning is a technique that divides large tables into smaller, more manageable chunks. By combining partitioning with the filter field and the primary key, you further optimized the search process. When a filter is applied, the database can quickly identify the relevant partition(s) containing the filtered data, reducing the search scope even further.

- Few code changes: When analyzed in detail found that in this particular scenario the concerned situation demanded only to fetch those records related to the currently logged in user. The original developers had used a join statement with the user_master and condition was user name. But the userid (int ) was already in the session, so just tweaked to remove the join statement and use the userid from the session to filter on the single table with userid = ‘xx’ condition.

Result: These optimizations led to a significant improvement in performance. The filtered pages now load and render in just 4-5 seconds, which is a massive improvement from the original 22-25 seconds.

Percentage decrease in loading time:

- The average of the original loading time range: (22 seconds + 25 seconds) / 2 = 23.5 seconds

- The difference between the original and optimized times: 23.5 seconds – 4.5 seconds = 19 seconds

- Divide this difference by the original average time and multiply by 100% to arrive at a percentage: (19 seconds / 23.5 seconds) * 100% = 80.85% (approximately 81%) decrease in loading time.

Percentage increase in loading speed:

- Calculate the improvement by dividing the original average time by the optimized time and multiply by 100%: (23.5 seconds / 4.5 seconds) * 100% = 522.22% (approximately 522%) increase in loading speed. This is absolutely insane and mind-blowing.

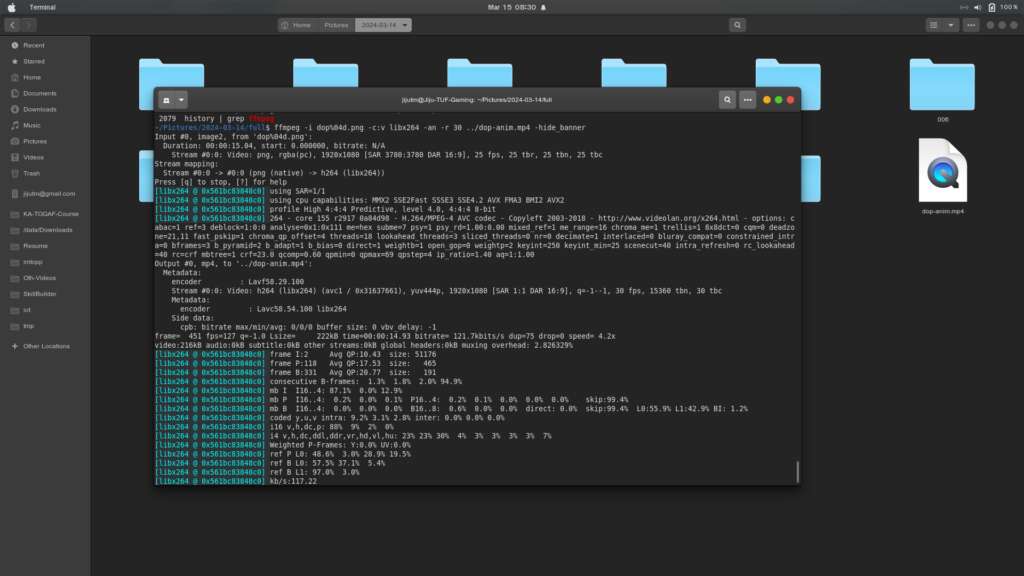

The anonymized captures of Firefox developer tools network performance analysis is added.